How good can A.I.-generated writing be when it’s designed to sound like everything else out there?

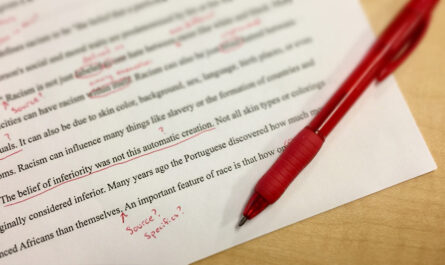

Several months ago, I got my hands on the short quiz The Atlantic uses to prescreen copyediting candidates and ran it through ChatGPT 3.5. I was impressed with the results: it was able to catch about half of the 20 or so errors deliberately seeded throughout the text. Half isn’t good enough to make it as a professional copy editor, of course—not even close—but it caught some errors most humans would miss, and I can imagine that, in a few years, it will be able to correct most or all typos in a given text.

If catching typos were all it took to make average writing great, that might be sufficient, but it isn’t, and it’s not.

Some major platforms have had A.I.-powered copyediting tools built right into them for years, notably the grammar check in Microsoft Word. And now, Axios suggests ways to “improve” your writing as you type, as do Gmail and Outlook. Perhaps you too have had your writerly pride injured by a robotic suggestion to change your use of “quite acceptable” to “acceptable”—as if they meant the same thing!

But A.I. no longer simply offers unsolicited advice of dubious value; it also invents entire passages out of whole cloth. I’ve been working with A.I.-generated text for several weeks now, and the more I see of it, the more dismal it looks. It’s uncanny how closely ChatGPT can simulate human writing, but it’s a very indistinct and superficial kind of writing. Wade through enough A.I.-generated writing and it begins to feel flat and samey and, well, robotic.

And while A.I. is constantly improving, I’m not sure that it will ever reach the level of skill that a human writer or editor can bring to the job.

Large language models are powered by probabilities, after all, which makes it unlikely that they’ll ever be able to conjure up something truly original and surprising.

Worse, the larger the corpus an LLM is trained on, the more likely it is to represent average-quality writing. As Sturgeon’s Law famously put it, “Ninety percent of everything is crud.” So if you’re training an LLM on what’s already out there, you can expect your input to be ninety percent awful. (One can only imagine what kind of writing will emerge when LLMs are trained on texts produced by other LLMs and the snake begins to eat its own tail.)

But we’re not aiming for average quality, are we? The goal is always to be the best, not to sound indistinguishable from the average writer, making average mistakes and producing average results. As readers abandon search engines and A.I. makes it possible to produce a virtually infinite amount of content on demand, publications will no longer be able to distinguish themselves or compete based on the quantity of content they produce. Only consistently high quality builds loyal readerships.

And the only way to produce consistently high-quality content is to hire the writers and editors capable of creating it. Running it through an LLM specifically designed to make it sound more like everything else out there can only produce mediocre results.

But I don’t expect you to take my word for it. Let’s look at some examples!

> The President’s surgery went smoothly and she is expected to make a full recovery, as per White House sources.

I can only imagine that ChatGPT produces sentences like this because millions of writers have already made the same mistake, but the fact is that “as per” ≠ “per.”

Roughly speaking, “per” means “according to” (at least when it’s used in an attribution); “per White House sources” is correct.

But “as per” suggests something more like “in accordance with”—as in the sentence “I always shovel my sidewalk within 24 hours of snowfall, as per homeowners association regulations.”

Using the wrong form just sounds a bit off, and when enough of these kinds of errors pile up—even if readers don’t consciously register them—it erodes a publication’s readability and credibility.

> Vitamin supplements are growing in popularity, with vitamin C being one of the most popular.

I’ve written about this before—you can’t just stick “with” and a gerund on the end of a sentence and call it a modifying clause. A well-formed participial phrase begins with a participle, not a preposition (and even well-formed ones should be used sparingly). ChatGPT uses this construction all the time, no doubt taking its cues from un- or poorly copyedited writing.

At the risk of giving away one of my trade secrets: I read everything I copyedit out loud to myself so I can hear how it sounds. And every time I reach a “with” + noun + gerund construction like this, my hackles rise.

> The ongoing conflict underscores the necessity for leaders to adjust to unstable situations.

Again, this statement might look reasonable at first glance. But by the hundredth time you’ve witnessed ChatGPT use the word “underscores” to describe the relationship between two things, it becomes all you can see. And if things aren’t “underscoring” things, they’re “highlighting” them; ChatGPT is obsessed with things “underscoring” and “highlighting” each other to the exclusion of all other words, as if the universe were powered by emphasis alone.

Both of these words have specific meanings, but ChatGPT throws them around like conjunctions—likely thanks to their ability to imply some kind of vague correlation that almost makes sense, if you don’t think about it too hard.

What does it mean to “underscore the necessity” of something? A better way of phrasing this might be “The persistent conflict makes it necessary for leaders to adjust to unstable situations.” That’s still not a very good sentence, but at least causality is now stated outright, rather than implied.

Sad to say, ChatGPT makes errors like these all the time, and as long as it’s trained on writing that includes them, it will probably continue to do so. But even if its writing were grammatically correct, it would still lack a distinctive voice. ChatGPT can only mimic what it has already read, and so much of what’s out there is not worth imitating.

Bonus: Would you believe I’ve found enough examples of bad A.I. writing to warrant a whole ’nother post?

Very true, and I have noted it's an issue with all these AIs, including DeepSeek: they are so obsessed with "underscore," "highlight," and "emphasize."